Did You Know: FTC Proposes New AI Protections

The Federal Trade Commission is seeking public comment on a proposed rule that would prohibit the impersonation of individuals, according to a media release. The proposed changes would extend to individuals the protections of the Commission's new rule on government and business impersonation, which the Commission is in the process of finalizing.

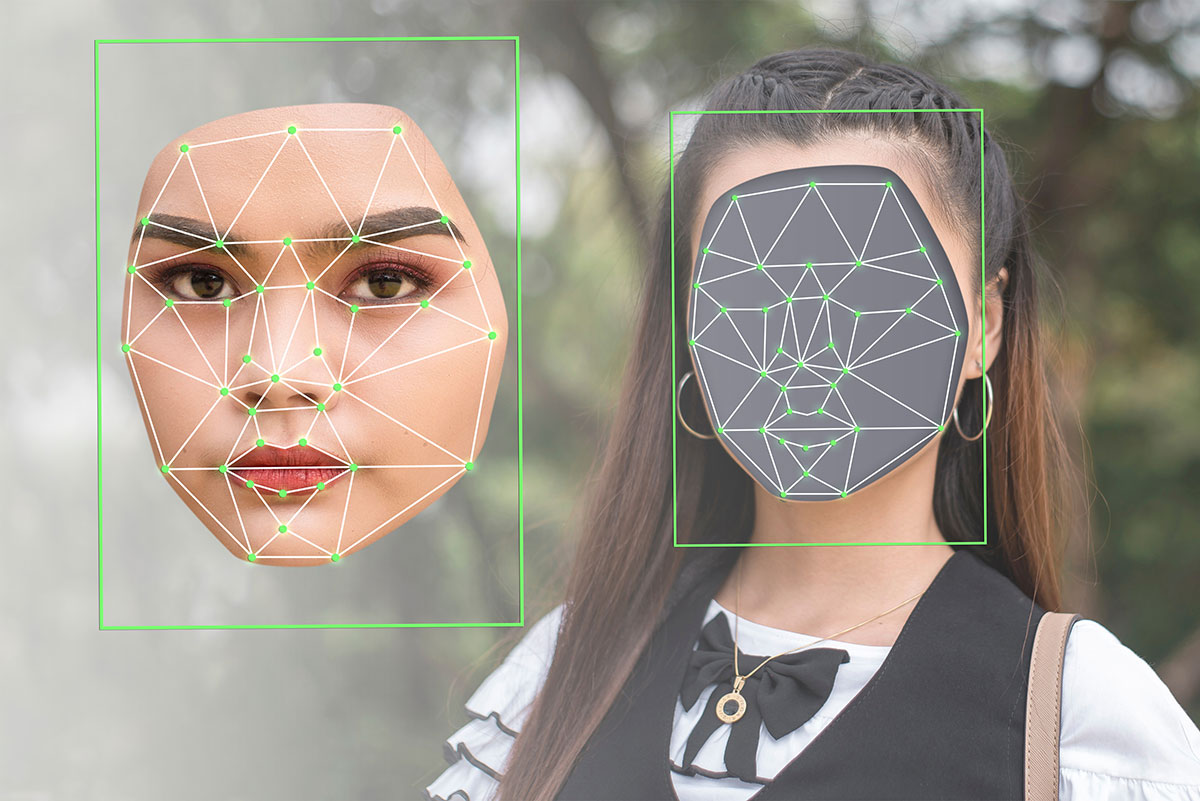

The agency is taking this action in light of a surge in complaints around impersonation fraud, as well as public outcry about the harms caused to consumers and to impersonated individuals. Emerging technology – including AI-generated deepfakes – threatens to turbocharge this scourge, and the FTC is committed to using all of its tools to detect, deter, and halt impersonation fraud, the release states.

The Commission is also seeking comment on whether the revised rule should make it unlawful for a firm, such as an AI platform that creates images, video or text, to provide goods or services that it knows or has reason to know are being used to harm consumers through impersonation.

“Fraudsters are using AI tools to impersonate individuals with eerie precision and at a much wider scale. With voice cloning and other AI-driven scams on the rise, protecting Americans from impersonator fraud is more critical than ever,” said FTC Chair Lina M. Khan in the release. “Our proposed expansions to the final impersonation rule would do just that, strengthening the FTC’s toolkit to address AI-enabled scams impersonating individuals.”

As scammers find new ways to defraud consumers, including through AI-generated deepfakes, the FTC says this proposal will help the agency deter fraud and secure redress for harmed consumers.

Government and business impersonation scams have cost consumers billions of dollars in recent years, and both categories saw significant increases in reports to the FTC in 2023. The final rule on government and business impersonation authorizes the agency to fight these scams more effectively.